scripts/evaluation folder#

This package contains the evaluation scripts for the project.

plot_training_metrics.py script#

A script that plots metrics to show how the training went.

plot_embedding_edge_performance.py script#

A script that plots the edge performance (edge purity and efficiency) as a function of the maximal squared distance.
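The two quantities named above can be illustrated with a small, self-contained sketch. It is not the script's implementation: the function name `edge_performance`, the toy inputs, and the exact definitions (purity as the fraction of selected edges that are true, efficiency as the fraction of true candidate edges that are selected) are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def edge_performance(
    squared_distances: Sequence[float],
    is_true_edge: Sequence[bool],
    max_squared_distances: Sequence[float],
) -> List[Tuple[float, float, float]]:
    """Return (threshold, purity, efficiency) for each threshold.

    Assumed definitions (not taken from the script itself):
    - purity: fraction of selected edges that are true edges
    - efficiency: fraction of all true candidate edges that are selected
    """
    n_true_total = sum(is_true_edge)
    results = []
    for threshold in max_squared_distances:
        # Keep the truth labels of the edges passing the distance cut.
        selected = [
            truth
            for d2, truth in zip(squared_distances, is_true_edge)
            if d2 <= threshold
        ]
        n_selected = len(selected)
        n_true_selected = sum(selected)
        purity = n_true_selected / n_selected if n_selected else float("nan")
        efficiency = (
            n_true_selected / n_true_total if n_true_total else float("nan")
        )
        results.append((threshold, purity, efficiency))
    return results


# Toy candidate edges: squared distance in the embedding space and truth label.
toy_d2 = [0.01, 0.02, 0.05, 0.10, 0.20, 0.40]
toy_truth = [True, True, False, True, False, False]
performance = edge_performance(toy_d2, toy_truth, [0.02, 0.1, 0.5])
for threshold, purity, efficiency in performance:
    print(f"d2_max={threshold:.2f}  purity={purity:.2f}  efficiency={efficiency:.2f}")
```

Loosening the cut on the squared distance typically raises the efficiency and lowers the purity, which is the trade-off such a plot is meant to expose.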

plot_embedding_best_tracking_performance.py script#

A script that plots the metric saved during training.

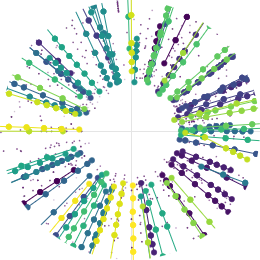

plot_embedding_edge_plane_diff.py script#

A script that plots statistics about the edges built by the embedding stage.

plot_embedding_n_neigbhours.py script#

A script that plots statistics about the edges built by the embedding stage.

plot_gnn_edge_performance.py script#

A script that plots the metric saved during training.

plot_gnn_triplet_performance.py script#

A script that plots the metrics saved during training.

plot_gnn_best_tracking_performance.py script#

A script that plots the metric saved during training.

evaluate_allen_on_test_sample.py script#

A script that evaluates the tracking performance of Allen on test samples.

- scripts.evaluation.evaluate_allen_on_test_sample.evaluate_allen(test_dataset_name, detector=None, event_ids=None, output_dir=None, **kwargs)[source]#

Evaluate the track finding performance of Allen in a test sample.

- Parameters:

  - test_dataset_name (str) – Name of a test dataset
  - event_ids (Union[List[str], List[int], None]) – Event IDs to consider. If not provided, all available events are considered
  - output_dir (Optional[str]) – Output directory where to save the reports and figures
  - **kwargs – Other keyword arguments passed to scripts.evaluate.evaluate()

- Return type:

TrackEvaluator

evaluate_etx4velo.py script#

A script that runs the performance evaluation of the ETX4VELO pipeline, using MonteTracko.

- Return type:

DataFrame

- scripts.evaluation.evaluate_etx4velo.compute_plane_stats(df_hits_particles, df_particles)[source]#

Compute variables related to the numbers of hits w.r.t. the planes.

- Parameters:

  - df_hits_particles (DataFrame) – Dataframe of hits-particles association. Must have the columns event_id, particle_id and plane.
  - df_particles (DataFrame) – Dataframe of particles. Must have the columns event_id and particle_id.

- Return type:

DataFrame

- Returns:

Dataframe of particles with the new columns.
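As a rough illustration of the kind of per-particle aggregation described above, the following sketch groups the hits by particle and merges the result back into the dataframe of particles. It is not the actual implementation of compute_plane_stats: the function name `sketch_plane_stats` and the output columns `n_hits` and `n_unique_planes` are hypothetical, and only the input columns event_id, particle_id and plane come from the documentation.

```python
import pandas as pd


def sketch_plane_stats(
    df_hits_particles: pd.DataFrame, df_particles: pd.DataFrame
) -> pd.DataFrame:
    """Add per-particle plane statistics (hypothetical column names)."""
    stats = (
        df_hits_particles.groupby(["event_id", "particle_id"])["plane"]
        # Named aggregation: one output column per keyword.
        .agg(n_hits="count", n_unique_planes="nunique")
        .reset_index()
    )
    # Left merge keeps every particle, even those without any hit.
    return df_particles.merge(stats, on=["event_id", "particle_id"], how="left")


# Toy input: particle 1 leaves two hits on plane 1, particle 2 one hit per plane.
toy_hits_particles = pd.DataFrame(
    {
        "event_id": [0, 0, 0, 0, 0],
        "particle_id": [1, 1, 1, 2, 2],
        "plane": [0, 1, 1, 3, 4],
    }
)
toy_particles = pd.DataFrame({"event_id": [0, 0], "particle_id": [1, 2]})
plane_stats = sketch_plane_stats(toy_hits_particles, toy_particles)
print(plane_stats)
```

Comparing the number of hits with the number of distinct planes is one simple way to flag particles that leave several hits on the same plane.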

- scripts.evaluation.evaluate_etx4velo.evaluate(df_hits_particles, df_particles, df_tracks, allen_report=True, table_report=True, plot_categories=None, plotted_groups=['basic'], min_track_length=3, matching_fraction=0.7, output_dir=None, detector=None, suffix=None, cure_clones=False, timestamp=False)[source]#

Runs truth-based tracking evaluation.

- Parameters:

  - path_or_config – path to the Exa.TrkX configuration file.
  - min_track_length (int) – minimum length of a track to be considered in the evaluation.
  - whether_to_plot – whether to plot histograms.
  - allen_report (bool) – whether to report in Allen categories using the Allen reporter
  - plot_categories (Optional[Iterable[Category]]) – Categories to plot on. By default, the one-dimensional histograms are plotted for the reconstructible tracks in the velo, and the long electrons. In order not to plot, you may set this variable to an empty list.
  - plotted_groups (Optional[List[str]]) – Pre-configured metrics and columns to plot. Each group corresponds to one plot that shows the distributions of various metrics as a function of various truth variables, as hard-coded in plot(). There are 3 groups: basic, geometry and challenging.

- Return type:

TrackEvaluator

- Returns:

object containing the evaluation.
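The roles of min_track_length and matching_fraction can be sketched with a simplified truth-matching routine. This is an assumption about how such parameters are commonly used, not a description of MonteTracko's actual matching criterion: the function `match_tracks`, its data layout, and the rule "a track is matched to the particle that contributes at least matching_fraction of its hits" are illustrative only.

```python
from collections import Counter
from typing import Dict, List, Optional


def match_tracks(
    tracks: Dict[int, List[int]],
    hit_to_particle: Dict[int, int],
    min_track_length: int = 3,
    matching_fraction: float = 0.7,
) -> Dict[int, Optional[int]]:
    """Map each sufficiently long track to a particle ID, or None if unmatched."""
    matches: Dict[int, Optional[int]] = {}
    for track_id, hit_ids in tracks.items():
        if len(hit_ids) < min_track_length:
            continue  # Too short: not considered in the evaluation.
        # Count how many hits of the track each true particle contributes.
        counts = Counter(
            hit_to_particle[hit_id]
            for hit_id in hit_ids
            if hit_id in hit_to_particle
        )
        if not counts:
            matches[track_id] = None  # Only noise hits.
            continue
        particle_id, n_shared = counts.most_common(1)[0]
        is_matched = n_shared / len(hit_ids) >= matching_fraction
        matches[track_id] = particle_id if is_matched else None
    return matches


# Toy truth: hits 0-3 belong to particle 10, hits 4-6 to particle 20.
toy_hit_to_particle = {0: 10, 1: 10, 2: 10, 3: 10, 4: 20, 5: 20, 6: 20}
toy_tracks = {
    0: [0, 1, 2, 3],  # all hits from particle 10
    1: [4, 5, 0],     # only 2 of 3 hits from particle 20
    2: [5, 6],        # shorter than min_track_length
}
matches = match_tracks(toy_tracks, toy_hit_to_particle)
print(matches)
```

In this toy case, track 0 is matched, track 1 falls below the 0.7 threshold and would count as unmatched, and track 2 is dropped before matching.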

- scripts.evaluation.evaluate_etx4velo.evaluate_partition(path_or_config, partition, suffix=None, output_dir=None, **kwargs)[source]#

Evaluate the track finding performance in a given partition.

- Parameters:

  - path_or_config (str | dict) – pipeline configuration dictionary or path to a YAML file that contains it
  - partition (str) – train, val or the name of a test dataset.
  - suffix (Optional[str]) – Suffix to add to the end of the files that are produced
  - output_dir (Optional[str]) – directory where to save the reports and figures
  - **kwargs – Other keyword arguments passed to evaluate()

- scripts.evaluation.evaluate_etx4velo.perform_evaluation(trackEvaluator, detector, allen_report=True, table_report=True, plot_categories=None, plotted_groups=['basic'], output_dir=None, suffix=None, timestamp=True)[source]#

Perform the “default” evaluation of a sample, after matching.

- Parameters:

  - trackEvaluator (TrackEvaluator) – montetracko.TrackEvaluator object, output of the matching
  - allen_report (bool) – whether to generate the Allen report
  - table_report (bool) – whether to generate the table reports
  - plot_categories (Optional[Iterable[Category]]) – Categories to plot on. By default, the one-dimensional histograms are plotted for the reconstructible tracks in the velo, and the long electrons. In order not to plot, you may set this variable to an empty list.
  - plotted_groups (Optional[List[str]]) – Pre-configured metrics and columns to plot. Each group corresponds to one plot that shows the distributions of various metrics as a function of various truth variables, as hard-coded in plot(). There are 3 groups: basic, geometry and challenging.
  - output_dir (Optional[str]) – Output directory where to save the report and the plots
  - suffix (Optional[str]) – string to append to the file name of the reports and figures produced.

compare_allen_vs_etx4velo.py script#

A script that compares the performance of ETX4VELO and Allen in a test sample.

- scripts.evaluation.compare_allen_vs_etx4velo.compare_allen_vs_etx4velo_from_trackevaluators(trackEvaluators, trackEvaluator_Allen, names=None, colors=None, categories=None, metric_names=None, columns=None, test_dataset_name=None, detector=None, output_dir=None, suffix=None, path_or_config=None, same_fig=True, with_err=True, **kwargs)[source]#

- scripts.evaluation.compare_allen_vs_etx4velo.compare_etx4velo_vs_allen(path_or_config, test_dataset_name, categories=None, metric_names=None, columns=None, same_fig=True, output_dir=None, lhcb=False, allen_report=False, table_report=False, suffix=None, with_err=True, compare_trackevaluators=True, **kwargs)[source]#

- scripts.evaluation.compare_allen_vs_etx4velo.compare_etx4velo_vs_allen_global(paths_or_configs, names, colors, test_dataset_name, categories=None, metric_names=None, columns=None, same_fig=True, lhcb=False, allen_report=False, table_report=False, suffix=None, compare_trackevaluators=True, **kwargs)[source]#